Instance 1: A bank is being robbed. Everyone can see it. Employees try to close the gates, but the gates don’t move. This goes on for 7 days while the CEO pleads with the robbers to stop.

Instance 2: An e-commerce site prices its shoes in a strange currency. The exchange that feeds the forex rate is manipulated with a multi-million-dollar loan taken without collateral.

Instance 3: An attacker steals funds and then returns half of them a few days later, with a message claiming the exploit was done for social good, to expose vulnerabilities.

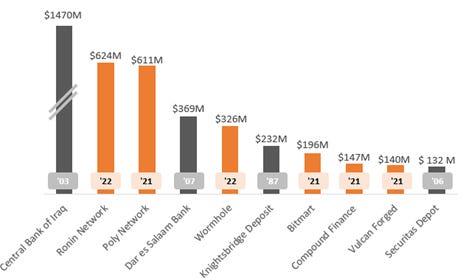

ABSURD? Well, it happens in DeFi. And not in pennies. In under 18 months, crypto exploits have come to claim more than half of the leaderboard of recorded money heists of the 21st century.

Top money heists (2021 USD) contrasted with crypto heists since 2021

Source: Rekt leaderboard, Hdogar (Medium)

Web3 security is clearly a pressing need. Unlike in web2 attacks, the funds siphoned here are directly a share of the total value locked (TVL) in a protocol and hence can be massive. Building trust and safety is essential to onboarding the next billion users; if this is left unsolved, web3 will remain a largely unfulfilled promise. That is also why there is an opportunity to build several category leaders in the space. Before we get to that: how are these attacks even happening? Why are they so frequent? Are blockchains insecure?

What is security in web3?

I have come across articles on DeFi attacks titled “blockchains are not bulletproof”, implying that these attacks involved corrupting a chain’s consensus mechanism. This is rather misleading. Yes, 51% attacks on PoW chains (through hash rate rentals) or PoS chains (for early-stage chains with low token value) are technically feasible and critical to avoid, but they don’t account for the billions in losses we’ve seen. Most attacks have targeted dApps built on Ethereum, and the chain itself is very much battle-tested by now.

So, what does security even mean? For the sake of this article, let’s stick to DeFi, though the topics are transferable to other web3 categories. There tend to be two categories of attacks in crypto/ DeFi: 1) deception by scams/ rogue protocols and 2) involvement in a protocol exploit. While the funds lost in the first category are massive, these losses often stem from low user literacy and should naturally decline (per Chainalysis, the average lifetime of scams has declined 35x since 2013). The latter is more systemic, requires structural solutions, and hence is the focus of this article. I will nonetheless start with a quick tour of the first category to make the distinction clear.

The less cool way to get rekt

Vitalik Buterin is not asking for ETH donations. And he is certainly not giving 2 ETH back for every 1 ETH sent to him. But some folks seem to believe he is. Crypto contributions to fake celebrity accounts, illicit mining pools, fraudulent brokers, Ponzi schemes, etc. accounted for $4.8 billion in 2021! But let us be clear: this is not web3-specific. These schemes have existed in the TradFi universe for ages, and crypto is only the means of payment (that said, crypto does amplify the impact through its global payment rails and its synonymy with “get rich quick”).

Typical celebrity giveaway - BEWARE

Source: somewhere on the internet

A crypto-native addition to scams has been the “rug pull”, which soared to fame in 2021, accounting for 37% of scam revenue. These are typically DeFi projects that, unlike vanilla scams (which only set up a wallet), also offer a token with supposed utility (e.g., unlocking game levels with the Squid token). The token is heavily pumped through social media campaigns, and then the founders exit, either by draining the liquidity pool of the token pair (on a DEX) or simply by dumping the token in large blocks. Some devious tokens may also restrict which addresses have the right to sell, or require holding another paired token in order to sell. Structurally, rug pulls are possible because of the trivial cost and effort involved in setting up a new token and the lack of any code audits/ KYC checks before a token is listed on a DEX (decentralized exchange, e.g., Uniswap).

SQUID token crashing by 99.98% in 5 minutes – classic “rug pull”

Source: CoinMarketCap

Let me reaffirm, though, that the transactions above were voluntary on the users’ part, and it is likely that a reasonable share of buyers of these obscure tokens understand the risk but still engage because they believe they can time the “pump and dump” better than other naïve investors. Of course, this does not apply universally; regardless, it’s important to drive user literacy and for DEXs to provide risk scoring on the tokens they list (risk is identifiable to an extent through shallow liquidity, anonymous team profiles, a missing or substandard white paper, etc.). Projects must also guard against individual rogue developers/ founders acting against their users, using multi-sigs (which require a minimum vote threshold to approve certain actions – more on this later) and, optionally, time-locks (which create a delay between the proposal and execution of an action).

Aside from voluntary actions, users may also be subject to malware that can outright steal private keys or hijack their computing resources by covertly installing cryptocurrency mining software. At an individual user level, there is nothing web3 about this – users need to take the precautionary measures already standard in web2.

Alright, with that I want to switch gears to the focus of the article: protocol exploits.

The wild west of financial crime

In my mind, attacks here fall into five broad buckets: the first two are off-chain attacks, and the rest are on-chain or crypto-native vectors.

1. Private key theft

Compromised private keys are the ultimate nemesis for a protocol and are particularly concerning when there is centralization – for example, access to a protocol’s locked funds/ treasury or privileged rights to deploy/ modify contracts (sometimes these rights are well-intentioned, to enable a quick response to external events, but they are a risk nonetheless). Ronin’s $625M exploit – the largest DeFi exploit in history – fits here (we’ll talk more about that later). Consider EasyFi’s loss of $59M due to the compromised mnemonic phrase of the CEO’s MetaMask wallet, or bZx’s loss of $55M when one of their developers fell for an email-based phishing attack that stole their keys. These show that all the traditional phishing vectors, from clipboard hijackers to trojans, need to be protected against.

Note that it’s not just official accounts that matter; it's important for accounts that hold a large share of a token’s circulating supply to stay protected as well. As we saw with Nexus Mutual, when the CEO’s personal wallet was compromised, the token still crashed 14% given the fear of a wholesale dump.

2. Front end attacks

Every dApp provides a front-end interface to interact with its smart contracts, and these front ends are exposed to well-known web2 attack vectors. The most serious of these include Cross-Site Scripting, or XSS (i.e., JavaScript injection that can modify the HTML through the browser’s DOM API to hijack the dApp’s interaction events and read session details/ private keys, even if held in local storage), and Cross-Site Request Forgery, or CSRF (wherein a malicious site or app abuses the user’s existing session with the dApp, e.g., its session cookies, to make it perform restricted actions the user never intended). XSS has long featured in OWASP’s (Open Web Application Security Project) Top 10 list of recognized risks, but the XSS attack on BadgerDAO shows that decades of fine-tuned solutions to these vectors have not been adopted seriously by web3 engineers and auditors, who have prioritized smart contract exploits. Here, the attacker used a compromised Cloudflare Workers API key to inject a script into the front end that intercepted web3 transactions and asked users to approve token transfers to the attacker’s own address. Note that the dApp’s UI is not the only way to interact with the underlying contracts: calls ultimately go through a JSON-RPC request to a node, and an attacker can pass malicious input in this call directly. This necessitates input validation (parsing the string and allowing only selected characters) within the contract itself, which costs gas, so developers may make trade-offs.
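As an illustration of the allowlist idea, here is a minimal sketch in Python rather than Solidity, for readability. The function name `is_allowed_input`, the permitted character set and the length cap are hypothetical choices, not a standard. Because the check touches every character, an on-chain equivalent would cost gas roughly proportional to input length, which is the trade-off mentioned above.

```python
import string

# Hypothetical allowlist: ASCII letters, digits and a few separators.
ALLOWED_CHARS = frozenset(string.ascii_letters + string.digits + " -_.")
MAX_LEN = 64  # assumed cap; longer inputs would also cost more gas to scan on-chain


def is_allowed_input(value: str) -> bool:
    """Accept the input only if it is non-empty, short enough, and fully allowlisted."""
    if not value or len(value) > MAX_LEN:
        return False
    return all(ch in ALLOWED_CHARS for ch in value)


print(is_allowed_input("my-vault_01"))                # True
print(is_allowed_input("<script>alert(1)</script>"))  # False
```

An allowlist (accept only known-good characters) is generally preferred over a denylist, since it does not depend on anticipating every malicious pattern.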

Speaking of nodes, node access is now concentrated among 3-4 large, centralized providers, and they do present concentrated points of attack – say, by applying unauthorized changes to an RPC call. We’ve also already seen how Domain Name System (DNS) records, which map domains to IP addresses and are maintained by centralized organizations, can be hijacked: Cream Finance and PancakeSwap underwent DNS attacks in which their GoDaddy (domain registrar) accounts were compromised, redirecting their domain names to a malicious phishing website. Luckily, no funds were lost here, but it shows how wide the attack surface is.

3. Smart contract exploits

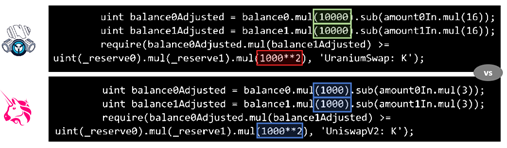

Code is law with smart contracts, and if the law has loopholes, attackers will use them. Sometimes the issues can be simplistic, as with Treasure DAO (an NFT marketplace on Arbitrum), which was drained of ~$1.5M last month by an exploiter who realized the code did not check that the order quantity was non-zero and could therefore siphon NFTs without paying anything – very amateur. Another such naïve example is shown below with Uranium Finance. Typically, though, attack vectors can be quite in the weeds. Before we double-click, I want to point out that attacks don’t necessarily mean stealing funds; they can have other adverse impacts as well – say, Denial of Service attacks where the exploiter stalls the protocol’s operations.
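To make the missing non-zero check concrete, here is a simplified Python model (hypothetical, not Treasure’s actual contract): the cost is computed as price × quantity, while a unique ERC-721 item is handed over regardless of quantity, so quantity = 0 makes the item free.

```python
class Marketplace:
    """Toy NFT marketplace (hypothetical), modelling a missing quantity check."""

    def __init__(self, check_quantity: bool):
        self.check_quantity = check_quantity
        self.owner_of = {}  # token_id -> owner

    def buy_item(self, buyer: str, token_id: int, price_per_unit: int,
                 quantity: int, payment: int) -> None:
        # The fix: reject zero (or negative) quantities up front.
        if self.check_quantity and quantity <= 0:
            raise ValueError("quantity must be non-zero")
        # Cost scales with quantity, so quantity == 0 makes the item free...
        if payment < price_per_unit * quantity:
            raise ValueError("insufficient payment")
        # ...while a unique ERC-721 item is transferred regardless of quantity.
        self.owner_of[token_id] = buyer


buggy = Marketplace(check_quantity=False)
buggy.buy_item("attacker", token_id=1, price_per_unit=100, quantity=0, payment=0)
print(buggy.owner_of[1])  # attacker, for a payment of 0

fixed = Marketplace(check_quantity=True)
try:
    fixed.buy_item("attacker", token_id=1, price_per_unit=100, quantity=0, payment=0)
except ValueError as err:
    print(err)  # quantity must be non-zero
```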

Uranium Finance, BSC DEX, exploited $57M due to improper forking from Uniswap

Source: Rekt

Let me walk through perhaps the most historic attack vector – reentrancy. Such an attack on The DAO (a crowdfunding DAO) led to a hard fork of Ethereum back in 2016, after ~3.6 million ETH were siphoned and the Ethereum community came together to protect the chain in its early days. In this attack, a vulnerable contract calls an external contract, say to transfer funds. Once called, the external contract takes control, and execution flows into its fallback function, which can be malicious: it can call the withdraw function in the vulnerable contract recursively, before the first invocation of withdraw has completed. This vector is exposed when state variables (such as the caller’s balance) are reset only after the external call rather than before it, which allows the subsequent invocations to succeed. Though this vector is well known, its variants are still quite popular – Grim Finance ($30M lost) and Cream Finance ($23M lost) are examples from recent months. Aside from reentrancy, there are plenty of Solidity idiosyncrasies that can lead to other issues, including integer overflow/ underflow (e.g., for an unchecked uint256 variable, (2^256 - 1) + 1 = 0 and 0 - 1 = 2^256 - 1 !!!; scenarios are possible where the token supply may be greater than the allowable size of a liquidity pool) and peculiarities of delegatecall, which executes another contract’s code in the context of the calling contract, i.e., with the caller’s storage, balance and msg.sender (seen with Furucombo, where its proxy contract was fooled into thinking the Aave building block it used had a new version). These attacks highlight that the composability DeFi celebrates also amplifies the attack surface, and third-party interactions must be carefully whitelisted. Below is a longer list of known attacks compiled in a recent IEEE paper.
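To make the ordering issue concrete, here is a toy Python model of reentrancy (not Solidity, and not real EVM call semantics); the `Vault`, `Honest`, `Attacker` and `run` names are hypothetical. The only difference between the two modes is whether the balance is zeroed before or after the external call, i.e., whether the checks-effects-interactions pattern is followed.

```python
class Vault:
    """Toy model of a contract holding deposits (not real EVM semantics)."""

    def __init__(self, safe: bool):
        self.safe = safe      # True: checks-effects-interactions ordering
        self.balances = {}    # depositor -> credited amount
        self.reserves = 0     # funds actually held by the vault

    def deposit(self, who, amount: int) -> None:
        self.balances[who] = self.balances.get(who, 0) + amount
        self.reserves += amount

    def withdraw(self, who) -> None:
        amount = self.balances.get(who, 0)
        if amount == 0 or amount > self.reserves:   # checks
            return
        if self.safe:
            self.balances[who] = 0                  # effects first...
            self.reserves -= amount
            who.receive(self, amount)               # ...then the external call
        else:
            self.reserves -= amount
            who.receive(self, amount)               # external call first...
            self.balances[who] = 0                  # ...balance reset too late


class Honest:
    def receive(self, vault, amount: int) -> None:
        pass


class Attacker:
    def __init__(self, max_depth: int = 50):
        self.stolen = 0
        self.depth = 0
        self.max_depth = max_depth

    def receive(self, vault, amount: int) -> None:
        # Plays the role of the malicious fallback: re-enter withdraw().
        self.stolen += amount
        self.depth += 1
        if self.depth < self.max_depth:
            vault.withdraw(self)


def run(safe: bool) -> tuple:
    vault = Vault(safe)
    honest, attacker = Honest(), Attacker()
    vault.deposit(honest, 90)
    vault.deposit(attacker, 10)
    vault.withdraw(attacker)
    return attacker.stolen, vault.reserves


print(run(safe=False))  # (100, 0): the honest user's 90 is drained too
print(run(safe=True))   # (10, 90)
```

In the unsafe mode, every re-entrant call still sees the attacker’s stale balance of 10, so the loop only stops once the vault’s reserves run out.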

Known vulnerabilities with Solidity

Source: Lee et al. (IEEE Access, 2022)
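On the integer overflow/ underflow item: unchecked uint256 arithmetic wraps modulo 2^256. Solidity 0.8 and later revert on overflow by default (arithmetic inside an `unchecked` block still wraps), while earlier versions wrapped silently, which is why libraries like OpenZeppelin’s SafeMath were standard. A small Python model of both behaviours, with hypothetical function names:

```python
UINT256_MOD = 2**256
UINT256_MAX = UINT256_MOD - 1


def unchecked_add(a: int, b: int) -> int:
    """uint256 addition without overflow checks: wraps modulo 2**256."""
    return (a + b) % UINT256_MOD


def unchecked_sub(a: int, b: int) -> int:
    """uint256 subtraction without underflow checks: also wraps."""
    return (a - b) % UINT256_MOD


def checked_add(a: int, b: int) -> int:
    """Raises instead of wrapping, analogous to a revert under SafeMath or Solidity >= 0.8."""
    result = a + b
    if result > UINT256_MAX:
        raise OverflowError("uint256 overflow")
    return result


print(unchecked_add(UINT256_MAX, 1))       # 0
print(unchecked_sub(0, 1) == UINT256_MAX)  # True
```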

And then come logic bugs ☠. One might think these protocols simply aren’t sticking to established standards like ERC-20, but these are less standards than guidelines. They require that certain functions like transferFrom() and balanceOf() be present, but implementations can vary widely, and only partly in the name of innovation. Here are some dimensions:

Not all tokens have 18 decimals: WBTC has 8, cTokens have 8, USDC has 6.

Token balances (for non-native assets) are held within contracts, meaning asset balances may not be definitive: they can be blacklisted or paused (as with USDT/ USDC), and some can be rebased, i.e., periodically updated (as with Aave’s aTokens, which accrue interest). Attacks on Origin Protocol, YAM, etc. originated from rebasing issues.

Internal accounting for transfers may vary, e.g., the amount sent may not equal the amount received because of transfer fees (USDT has a fee mechanism that could be switched on) or because part of the transferred amount is burned, reducing supply. The attack on a Balancer pool through STA tokens stemmed from this.
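Two of these pitfalls can be sketched in a few lines of Python. The first normalizes raw integer amounts using each token’s decimals; the second shows why crediting the requested amount of a fee-on-transfer token over-credits the depositor, and how measuring the pool’s balance before and after the transfer avoids it. `FeeOnTransferToken`, its 1% burn, and the deposit helpers are hypothetical, loosely in the spirit of tokens like STA.

```python
from decimal import Decimal

# Raw on-chain amounts representing exactly 1 token.
ONE_USDC = 1_000_000       # 6 decimals
ONE_WBTC = 100_000_000     # 8 decimals
ONE_WETH = 10**18          # 18 decimals


def to_units(raw_amount: int, decimals: int) -> Decimal:
    """Convert a raw integer amount into whole-token units."""
    return Decimal(raw_amount) / (Decimal(10) ** decimals)


class FeeOnTransferToken:
    """Hypothetical token that burns 1% of every transfer."""
    FEE_BPS = 100  # 1% in basis points (assumed)

    def __init__(self, balances: dict):
        self.balances = dict(balances)

    def transfer(self, sender: str, recipient: str, amount: int) -> None:
        fee = amount * self.FEE_BPS // 10_000
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount - fee


def deposit_naive(ledger: dict, token, user: str, amount: int) -> None:
    token.transfer(user, "pool", amount)
    ledger[user] = ledger.get(user, 0) + amount      # credits the requested amount


def deposit_balance_delta(ledger: dict, token, user: str, amount: int) -> None:
    before = token.balances.get("pool", 0)
    token.transfer(user, "pool", amount)
    received = token.balances["pool"] - before       # credits what actually arrived
    ledger[user] = ledger.get(user, 0) + received


token = FeeOnTransferToken({"alice": 20_000})
naive, safe = {}, {}
deposit_naive(naive, token, "alice", 10_000)
deposit_balance_delta(safe, token, "alice", 10_000)
print(naive["alice"], safe["alice"], token.balances["pool"])  # 10000 9900 19800
```

The naive ledger credits 10,000 for a deposit that only delivered 9,900; repeated across many deposits, that gap is what an attacker can withdraw from other users’ funds.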

A sample case: bZx

I’ve typically found it helpful to take a real example to understand how this plays out. Let’s take a rather simple one – bZx’s 71 ETH flash loan attack. bZx was exploited four separate times over a year or so – hence the need to specify which one 🤷‍♂️

Side bar: For readers not familiar with flash loans, these are uncollateralized loans that can be drawn without identity/ credit checks, with the requirement that the funds be returned within the same transaction; otherwise the whole transaction reverts to the pre-loan state (flash mints exist too! You can mint your own tokens as long as you burn them within the same transaction). Aave, the protocol that pioneered these loans, has issued over $4B in flash loans (as of June last year) and regularly issues over $100M per day! Flash loans are a genius DeFi primitive, and nothing about them is inherently vulnerable – they enable vault collateral swaps, closing out collateralized debt positions, large-scale arbitrage that drives market efficiency, etc. But they can also be used by adversarial actors. If this is the first time you’ve read about flash loans, you may need a tea break – we can resume afterwards ☕
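The all-or-nothing property can be modelled in a few lines. This is a hypothetical Python sketch, not Aave’s interface: on-chain, the EVM reverts the entire transaction when repayment fails, which the toy emulates by snapshotting the pool’s liquidity and restoring it. The 9 bps fee is an assumed parameter, and only the pool’s state is restored here (on-chain, the borrower’s actions would be undone too).

```python
class FlashLoanReverted(Exception):
    pass


class LendingPool:
    """Toy flash-loan pool; a snapshot/restore stands in for an EVM revert."""

    def __init__(self, liquidity: int, fee_bps: int = 9):
        self.liquidity = liquidity
        self.fee_bps = fee_bps  # assumed fee in basis points

    def flash_loan(self, amount: int, callback) -> None:
        snapshot = self.liquidity
        fee = amount * self.fee_bps // 10_000
        self.liquidity -= amount            # funds handed to the borrower
        try:
            repaid = callback(amount, fee)  # borrower does arbitrage, swaps, etc.
            if repaid < amount + fee:
                raise FlashLoanReverted("loan not repaid within the transaction")
            self.liquidity += repaid
        except Exception:
            self.liquidity = snapshot       # all-or-nothing: back to the pre-loan state
            raise


pool = LendingPool(liquidity=1_000_000)
pool.flash_loan(100_000, lambda amount, fee: amount + fee)  # repaid with the fee
print(pool.liquidity)  # 1000090

try:
    pool.flash_loan(100_000, lambda amount, fee: 0)         # borrower defaults
except FlashLoanReverted:
    pass
print(pool.liquidity)  # 1000090, as if the second loan never happened
```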

Walkthrough of bZx’s 71 ETH attack

Source: Peckshield, Rekt

bZx is a margin trading and lending protocol. If you follow the steps in the table, you will see that the essence of the attack was forcing bZx to make the doomed trade of 5.6k ETH for 51.3 wBTC at an exchange rate ~3x worse than the prevailing market rate. Slippage does happen, and to prevent such extremes the contract does have a function that checks whether the user has posted enough collateral – but due to a logic bug, that function was never called. Unfortunate. I chose this example to show that flash loans are not used only in the pure economic attacks detailed in the next section; they can also merely amplify the impact of a logic bug.

4. Pure economic manipulation (non-bug attacks)

Sometimes attacks don’t stem from issues in a protocol’s code. The mechanics of the underlying blockchain can be abused, such as through transaction ordering within a block (check out MEV if interested), but while these constitute risks to end users, they rarely pose wholesale risks at a protocol level. Beyond this, it may be possible to orchestrate a complex set of financial maneuvers (often involving novel DeFi primitives) that can put a protocol at risk. The most common in this category is “oracle manipulation” – two words that have tired and vexed many in DeFi. And yes, it involves flash loans.

How does this work? Protocols often need a price feed for assets and source it from external providers called oracles; DEXs can serve this purpose. Given AMM design, a flash loan interacting with a liquidity pool can cause significant price slippage, and the resulting prices can be used, say, to trick a lending protocol into believing that deposited collateral is worth much more than it is, allowing the attacker to siphon a larger loan than the desired collateralization ratio permits. This issue was quite the rage until late 2020, but solutions like TWAP (time-weighted average price) oracles have since been widely adopted. Even if the problem isn’t in the code, the solution can be in the code! That said, DeFi innovates faster than its security plugs. What would you do if your synthetic asset has no third-party oracle and you need a custom oracle? Such was the root cause of the $136M CREAM Finance hack. As you’ll see in the diagram below, the attacks can be really sophisticated.
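A rough numerical sketch of why a spot price is manipulable while a TWAP is much harder to move, assuming a fee-less Uniswap-v2-style constant-product pool (x · y = k) with illustrative reserves. Real TWAP oracles (e.g., Uniswap v2’s) are built on cumulative price accumulators sampled across blocks; the simple weighted average below only illustrates the effect of a price spike that lasts at most one ~12 s block in a 30-minute window.

```python
from dataclasses import dataclass


@dataclass
class ConstantProductPool:
    """Fee-less x * y = k pool for an ETH/USD pair (a simplification)."""
    reserve_eth: float
    reserve_usd: float

    def spot_price(self) -> float:
        """Instantaneous ETH price implied by the reserves."""
        return self.reserve_usd / self.reserve_eth

    def swap_usd_for_eth(self, usd_in: float) -> float:
        k = self.reserve_eth * self.reserve_usd
        self.reserve_usd += usd_in
        new_reserve_eth = k / self.reserve_usd
        eth_out = self.reserve_eth - new_reserve_eth
        self.reserve_eth = new_reserve_eth
        return eth_out


def twap(observations: list) -> float:
    """Time-weighted average of (price, seconds_in_effect) observations."""
    total_time = sum(seconds for _, seconds in observations)
    return sum(price * seconds for price, seconds in observations) / total_time


pool = ConstantProductPool(reserve_eth=1_000, reserve_usd=2_000_000)
honest_price = pool.spot_price()   # 2000.0
pool.swap_usd_for_eth(2_000_000)   # flash-loan-funded buy
manipulated = pool.spot_price()    # 8000.0: a naive spot oracle now reads 4x
# 30-minute window in which the manipulated price lasts ~one 12 s block.
window_price = twap([(honest_price, 1_788), (manipulated, 12)])
print(honest_price, manipulated, window_price)  # 2000.0 8000.0 2040.0
```

Doubling the USD reserve halves the ETH reserve, so the spot price quadruples, while the 30-minute TWAP moves by only 2%.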

Anatomy of CREAM finance’s $136M exploit

Source: Merkle Science, Andy Pavia

5. Governance attacks

Moving on from contract vulnerabilities, adversaries can acquire a large amount of a protocol’s governance tokens to direct decisions in their favor. Are these really attacks? We might agree that if the tokens are only held temporarily (e.g., through a flash loan), this is an exploit, and governor contracts need guardrails, such as measuring voting power from token balances at a previous block. But what if a significant share of the circulating supply is simply bought out? That is basically a hostile takeover, not really an exploit. In fact, when Compound was subject to this vector, the founder seemed quite okay with it – see his tweet below. In this instance, the proposal was not malicious, merely self-benefitting. Voting escrows, introduced by Curve, address this issue head-on.
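The “check balance from a previous block” guardrail can be sketched as per-block checkpoints, similar in spirit to Compound’s `getPriorVotes` and OpenZeppelin’s `ERC20Votes.getPastVotes`. The Python class below is a hypothetical model, not either library’s code: voting power is read as of the proposal’s snapshot block, so tokens borrowed afterwards carry no weight.

```python
import bisect


class CheckpointedVotes:
    """Voting power recorded per block, so a governor can read balances as of
    an earlier block (e.g., when a proposal was created) rather than 'now'."""

    def __init__(self):
        self._blocks = {}   # holder -> ascending block numbers
        self._values = {}   # holder -> balance recorded at each of those blocks

    def record(self, holder: str, block: int, balance: int) -> None:
        blocks = self._blocks.setdefault(holder, [])
        values = self._values.setdefault(holder, [])
        if blocks and blocks[-1] == block:
            values[-1] = balance        # last write within a block wins
        else:
            blocks.append(block)
            values.append(balance)

    def votes_at(self, holder: str, block: int) -> int:
        blocks = self._blocks.get(holder, [])
        i = bisect.bisect_right(blocks, block)   # latest checkpoint <= block
        return self._values[holder][i - 1] if i else 0


votes = CheckpointedVotes()
votes.record("long_term_holder", block=10, balance=5_000)
votes.record("flash_borrower", block=1_000, balance=1_000_000)  # borrowed tokens

snapshot_block = 999  # proposal created before the borrow
print(votes.votes_at("long_term_holder", snapshot_block))  # 5000
print(votes.votes_at("flash_borrower", snapshot_block))    # 0
```

This stops flash-borrowed votes, but, as noted above, it does nothing against an attacker who simply buys and holds tokens before the snapshot.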

Reaction from Compound’s founder on an adversarial governance proposal

Source: somewhere on Twitter

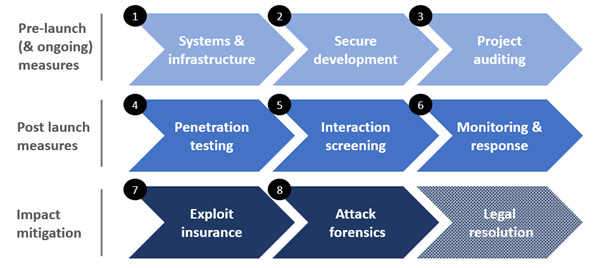

Bolstering web3 security

Alright, we’ve defined web3 security. Attacks come in various shapes and novel forms, and while the web2 attack vectors are applicable here as well, we’ve seen there are plenty of issues native to the space. So, how can a project protect itself from this onslaught? A whole suite of systems/ dev tools is required at every phase of the project’s journey from the design phase to launch and beyond. Let’s dive in.

Getting things right from day one

Systems & infrastructure

Centralization is not some philosophical nemesis of DeFi – it very practically is. Starting with governance, DAOs (with appropriate protections) prevent nefarious insider actions and single points of failure. However, for a higher execution pace, and where a few mission-critical functions should remain controllable by a core team (e.g., Yearn’s two-layer model), multi-sigs like Gnosis Safe (which can require, say, 4 of 7 or 5 of 9 signers to approve a transaction) are a pretty good option – certainly better than holding a protocol’s treasury in MetaMask, as a few have done! But as the recent Ronin bridge hack ($625M siphoned, the largest DeFi exploit ever) has shown, the multi-sig must also be implemented in a secure, decentralized manner. In Ronin’s case, Sky Mavis (the developer) held 4 of the 9 validator keys, and one of the other validators had authorized Sky Mavis to sign on its behalf for quicker decision making. Though this arrangement was later discontinued, the access was never revoked, meaning that a hack of Sky Mavis’ systems gave the attacker the 5 signatures needed for full control of the multi-sig. Very unfortunate! In general, a multi-sig is only as secure as its validators, and checks must be in place to ensure that signers are logically isolated, that security policies are updated when a signer goes offline, etc.
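A multi-sig’s real threshold depends on how many distinct parties actually control the keys. This hypothetical Python check counts keys per controlling entity and flags any entity that could meet the threshold alone. The setup is modelled loosely on Ronin, assuming 9 validator keys and a 5-of-9 threshold (the number Ronin’s bridge required); the labels are made up.

```python
from collections import Counter


def keys_per_entity(key_custody: dict) -> Counter:
    """Count how many validator keys each real-world entity can actually sign with."""
    return Counter(key_custody.values())


def single_points_of_failure(key_custody: dict, threshold: int) -> list:
    """Entities that could meet the signing threshold on their own."""
    return [entity for entity, n in keys_per_entity(key_custody).items() if n >= threshold]


# Ronin-like setup (hypothetical labels): 9 validator keys, 5 signatures required.
custody = {f"validator_{i}": "sky_mavis" for i in range(1, 5)}   # 4 own keys
custody["validator_5"] = "sky_mavis"                             # one more key effectively usable
custody.update({f"validator_{i}": f"partner_{i}" for i in range(6, 10)})

print(single_points_of_failure(custody, threshold=5))  # ['sky_mavis']

custody["validator_5"] = "partner_5"                    # that access actually removed
print(single_points_of_failure(custody, threshold=5))  # []
```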

The buck doesn’t stop with governance. A typical project utilizes several external services, e.g., node providers, storage systems, domain name providers. Centralized service providers do offer a high quality of service, and while projects might still decide (and logically so) to opt for them, they must be aware of the trade-offs involved. Going back to the earlier example of CREAM Finance, its reliance on GoDaddy for its domain name listing created the attack vector; since then, CREAM has migrated to the Ethereum Name Service (ENS) and deployed a decentralized front end on IPFS. Similarly, there’s a strong trend for NFT projects to utilize Arweave for immutable, permanent storage. On the nodes side, incumbents do have phenomenal customer satisfaction, but the community is cognizant of Infura’s unintended restriction of Venezuelan users and is increasingly embracing decentralized alternatives like Pocket Network (whose API calls grew 100x in the last year).

Secure development

Most DeFi projects are scrappy teams hurrying to push out a product, and they rarely have an in-house security expert. In fact, some of the devs may have recently transitioned from web2 and may be writing Solidity for the first time in their lives. How can we save them?

It starts with smart contract libraries that have already been vetted against common vulnerabilities, such as implementations of token standards like ERC-20/ ERC-721 – OpenZeppelin has done a phenomenal job here. When building a complex contract, though, this is simply not sufficient. Say, in the spirit of composability, a developer wants to leverage third-party functions from another project: how would they ensure those are secure? Or even that the source code on GitHub is consistent with the deployed bytecode? (check out Sourcify).

All right. So, the code’s pulled together – time to unit test. There are plenty of testing tools/ environments/ testnets, with scope for improvement, but given our focus on security vulnerabilities, let’s assume this gets done. However, narrowly testing for use cases isn’t quite enough given the very wide operating conditions of these protocols. Is there scope for automation? “Symbolic execution” (e.g., with Oyente, Manticore, Mythril) runs a program on symbolic inputs and, for each explored control path, generates a symbolic constraint that can later be solved to identify concrete values that trigger a vulnerability. The technique struggles when such constraints are too complex, when the state space explodes (say, due to program loops), and in tracing external function calls. “Fuzzing” (e.g., with Harvey, Echidna) is another technique that can test deep down the execution tree by using a massive set of randomized inputs, but it has a coverage issue. In property-based fuzzing, the contract is annotated (in a language like Scribble) with a set of mathematical requirements (e.g., the sum of all balances = total supply), and the tool checks whether these properties hold across the generated inputs. These tools are often used in conjunction – e.g., MythX offers a paid subscription to a suite of them: Mythril (symbolic execution) + Harvey (fuzzer) + static analysis tools.
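To show what property-based fuzzing does, here is a plain random fuzzer in Python (not Echidna or Scribble syntax) that checks the property “sum of all balances = total supply” after every operation. `BuggyToken` has a hypothetical bug: it reads both balances before writing, so a self-transfer credits the sender without debiting them. The fuzzer returns the first operation sequence that breaks the property.

```python
import random

USERS = ["alice", "bob", "carol"]


class BuggyToken:
    """Toy token with a hypothetical bug: cached balances break self-transfers."""

    def __init__(self):
        self.total_supply = 0
        self.balances = {}

    def mint(self, to: str, amount: int) -> None:
        self.total_supply += amount
        self.balances[to] = self.balances.get(to, 0) + amount

    def transfer(self, src: str, dst: str, amount: int) -> None:
        src_bal = self.balances.get(src, 0)
        dst_bal = self.balances.get(dst, 0)   # stale if src == dst
        if src_bal < amount:
            return                            # a contract would revert here
        self.balances[src] = src_bal - amount
        self.balances[dst] = dst_bal + amount


class FixedToken(BuggyToken):
    def transfer(self, src: str, dst: str, amount: int) -> None:
        if self.balances.get(src, 0) < amount:
            return
        self.balances[src] = self.balances.get(src, 0) - amount
        self.balances[dst] = self.balances.get(dst, 0) + amount  # re-read after the debit


def invariant_holds(token) -> bool:
    # Property: the sum of all balances equals total supply.
    return sum(token.balances.values()) == token.total_supply


def fuzz(token_cls, runs: int = 1_000, steps: int = 20, seed: int = 0):
    """Run random operation sequences; return the first one that breaks the property."""
    rng = random.Random(seed)
    for _ in range(runs):
        token, trace = token_cls(), []
        for _ in range(steps):
            if rng.random() < 0.3:
                op = ("mint", rng.choice(USERS), rng.randint(0, 100))
                token.mint(*op[1:])
            else:
                op = ("transfer", rng.choice(USERS), rng.choice(USERS), rng.randint(0, 100))
                token.transfer(*op[1:])
            trace.append(op)
            if not invariant_holds(token):
                return trace  # counterexample
    return None


counterexample = fuzz(BuggyToken)
print(counterexample[-1])  # the offending op: a transfer where src == dst
print(fuzz(FixedToken))    # None
```

Real fuzzers add coverage guidance and shrink counterexamples to a minimal sequence, but the core loop of random inputs plus an invariant check is the same.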

But hold on – that’s still not complete execution coverage. There are mission-critical systems outside DeFi (aircraft, emergency power generators, etc.) that cannot afford to miss edge cases, and DeFi can borrow from them. Enter formal verification: mathematical methods that can offer rigorous guarantees about the operational behavior of contracts.

These methods are, in some sense, the ultimate safeguard, at least from a build perspective. Only a few blockchains (like Cardano) were designed with formal verification in mind – Ethereum clearly was not. Nonetheless, a few tools have come about, like SMTChecker and VeriSol (by Microsoft), which work on Solidity, and Certora’s AEV, which works directly on EVM bytecode; I think the latter is stronger as it addresses implementation errors as well. Some ambitious teams have gone whole hog in proposing entirely new programming languages to replace Solidity, such as CertiK’s DeepSEA, which is compatible with existing formal proof assistants, or intermediate languages (between Solidity and bytecode) such as Scilla. Others have even proposed modified EVMs with formal semantics (e.g., KEVM, which operates 30 times slower than the EVM). Almost all of this category is high-intensity R&D.

Project auditing

Auditing != unit testing. In fact, audit firms require the code to be well documented and tested so that they can focus on security vulnerabilities.

With exploits happening at an indigestible scale, it’s a no-brainer that “security auditors” are among the hottest roles out on Doge Street, easily commanding salaries of over $300k. That has two implications: there’s a long queue (several months) to get audited by the top shops (say, OpenZeppelin, Trail of Bits, Quantstamp), and it’s expensive. I’ve even heard of crypto-native VC firms investing in auditors to gain preferential access – such is the demand. This means sloppy projects can choose to skip external auditing until they reach some scale, and DEXs not requiring such audits exacerbates the issue. That said, being audited by a top shop is considered a strong branding signal.

Auditors aspire to be holistic in their coverage, including smart contracts, network configurations, financial structures and governance systems. Hence it’s a customized, people-centric business with sparse automation. Many auditors have started using static/ dynamic code analyzers, fuzzers and the other tools mentioned earlier, and a few like ConsenSys have developed/ rolled up proprietary tools (such as MythX, mentioned earlier). I’ve been thinking about what a good productivity metric might be to compare auditing firms – perhaps “manpower cost”/ “gas consumption of contract audited”.

A common pitfall here is shoving the audit report onto a dusty shelf. Auditors can even work collaboratively with the dev team to address the vulnerabilities identified. This does not always happen, however, and the industry standard is for auditors to publish their findings openly for public awareness.

Now the project is up and running – how do we protect it at run time?

Penetration testing

Another key issue with an audit is that it is a “point in time” solution and can devolve into a checklist-type exercise, as the auditors’ incentive structure does not fully reward creative inspection for potential vulnerabilities. Similar to the evolution of crowdsourced pen testing in the web2 world with HackerOne, Synack, etc., there is massive potential for bug bounty programs in web3 as well. While some projects run their own programs, white hats are a scarce resource, and sourcing and managing them can be more effort than paying a platform margin. Immunefi, as an early mover in the space, has been phenomenally successful, and now we’re seeing web2 companies moving in as well.

I believe such platforms can be even more successful in web3 because the financial risk is immediate and quantifiable, so bounties can be negotiated as a % of value at risk, compared to the typical $1-2k web2 payout (bear in mind that the chance of finding a bug is quite low, so rewards need to be substantial to justify the opportunity cost of the time spent). Adding to this, most web3 code is open source anyway, so the sales process does not involve asking players to expose their codebase. Further, there’s no need for NDAs since the code is public anyway – I would be interested to see NFTs issued for such bounties that could become part of an on-chain resume.

Interaction screening

If you’re a DeFi native, you’ll probably not read beyond this point (psst – that’s why it’s at the end). It’s a simple question: can we reap the benefits of decentralization (permissionless access, near-immediate finality, one truly global system) while also placing guardrails on it? Yes, we can. Addresses involved in past exploits, those that received significant amounts from fund mixers, those demonstrating suspicious hopping, etc. can be flagged through an oracle, and protocols could decide, either programmatically or through governance, to blacklist such addresses from interacting with their protocols. Uniswap has contracted TRM Labs to classify high-risk addresses interacting with the project, and I expect to see a lot more adoption on this front.
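A programmatic version of such a gate could look like the hypothetical Python policy below, assuming a risk oracle supplies a set of flagged addresses and each address’s share of inflows from mixers. The 50% tolerance and the addresses are made up. Ethereum hex addresses are case-insensitive (mixed case is only an EIP-55 checksum), so the lookup normalizes to lowercase.

```python
from dataclasses import dataclass, field

FLAGGED_ADDR = "0xbad" + "0" * 36 + "1"  # placeholder 40-hex-digit address
CLEAN_ADDR = "0x" + "11" * 20            # placeholder 40-hex-digit address


@dataclass
class ScreeningPolicy:
    """Hypothetical interaction gate fed by a risk oracle."""
    flagged: set = field(default_factory=set)  # lowercase addresses from past exploits
    max_mixer_share: float = 0.5               # assumed tolerance for mixer-sourced inflows

    def allow(self, address: str, mixer_inflow_share: float) -> bool:
        # Normalize case: checksummed and lowercase forms are the same address.
        if address.lower() in self.flagged:
            return False
        return mixer_inflow_share <= self.max_mixer_share


policy = ScreeningPolicy(flagged={FLAGGED_ADDR})
print(policy.allow("0x" + FLAGGED_ADDR[2:].upper(), 0.0))  # False: flagged
print(policy.allow(CLEAN_ADDR, 0.1))                       # True
print(policy.allow(CLEAN_ADDR, 0.9))                       # False: too mixer-heavy
```

Whether a `False` means a hard revert or only routes the address to a governance review is a policy choice, matching the programmatic vs governance-driven options above.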

Going a step further, if there were anything close to a panacea for web3 security, it would be identity disclosure. Identity also addresses other concerns (money laundering, sanctions violations, etc.) and has a pan-community benefit of enabling credit scoring (and hence personalized offerings) across DeFi, gaming, etc. But is exposing identity antithetical to web3? When I heard a company present an identity solution at ETHDenver, someone in the audience shouted “this makes my blood boil” – the community values anonymity. Can there be an identity solution that is private, verifiable and permissionless? Players like Spruce ID and Polygon ID are tackling this. The topic warrants its own article.

Monitoring and response

Attacks often unfold across multiple blocks, and there may even be leading on-chain signals or off-chain precursors. It turns out that a host of such early-warning signals can help projects ideally prevent the attack or, failing that, reduce the value extracted. These signals could be at the blockchain level, protocol-specific, or even concern a protocol’s dependencies. Take the example of a lending protocol: it would want to know when a Tornado Cash-funded address has deployed a contract (chain level), whether its collateralization ratio has abruptly changed (protocol level), and whether a significant share of its deposits is attributable to another protocol (dependencies). And there’s a lot to monitor too, from financials and liquidity to data feeds, governance, etc. Forta Protocol, spun off from OpenZeppelin last year, is pioneering this category. (Disclaimer: I worked with them earlier this year! Super bullish)
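A protocol-level signal such as “collateralization ratio abruptly changed” can be expressed as a small detector over per-block samples. This is a hypothetical Python sketch in the spirit of a monitoring bot, not the Forta SDK; the 20% drop threshold and the sample numbers are made up.

```python
from typing import Iterable, Iterator, Tuple

INF = float("inf")


def collateralization_ratio(collateral_usd: float, debt_usd: float) -> float:
    return INF if debt_usd == 0 else collateral_usd / debt_usd


def abrupt_drops(samples: Iterable[Tuple[int, float, float]],
                 max_relative_drop: float = 0.2) -> Iterator[Tuple[int, float, float]]:
    """Yield (block, previous_ratio, ratio) when the protocol-wide ratio falls by more
    than max_relative_drop between consecutive samples."""
    prev = None
    for block, collateral_usd, debt_usd in samples:
        ratio = collateralization_ratio(collateral_usd, debt_usd)
        if prev is not None and prev != INF and ratio < prev * (1 - max_relative_drop):
            yield block, prev, ratio
        prev = ratio


# (block, total collateral in USD, total debt in USD): illustrative numbers only
history = [(100, 300e6, 100e6), (101, 295e6, 100e6), (102, 150e6, 100e6), (103, 150e6, 100e6)]
for alert in abrupt_drops(history):
    print("ALERT", alert)
```

The small drift at block 101 stays below the threshold, while the halving at block 102 raises a single alert; tuning that threshold is the usual trade-off between noise and missed attacks.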

But wait, what do we do with these signals? This is not a straightforward question. DAO governance processes might be too slow for emergency response, and multi-sigs are best suited here (ideally as a layered model). Adding to the pace issue, some projects institute time-locks on their contracts that add a delay, to prevent malicious actors or unfavorable decisions from impacting (unwilling) DAO participants. This time-lock can be a severe double-edged sword during security lapses, as we saw with the classic Compound Finance exploit, where the community sat and watched for three days as its Comptroller contract was drained. Another sustainable approach could be to automate the response (e.g., using Gelato) to take a specific action when an alert crosses a threshold intensity – I don’t think anyone is doing this yet.
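The tension between time-locks and emergency response can be seen in a toy Python model: a queued fix cannot execute before its delay expires, while a hypothetical automated circuit breaker can pause immediately once an alert crosses a threshold. The two-day delay, the 0.9 threshold and the action name are assumptions, and nothing here uses Gelato’s actual API.

```python
class Timelock:
    """Toy timelock: a queued action can only execute once `delay` seconds have passed."""

    def __init__(self, delay: int):
        self.delay = delay
        self.queue = {}  # action_id -> earliest execution timestamp

    def schedule(self, action_id: str, now: int) -> int:
        eta = now + self.delay
        self.queue[action_id] = eta
        return eta

    def execute(self, action_id: str, now: int) -> bool:
        eta = self.queue.get(action_id)
        if eta is None or now < eta:
            return False        # too early: this applies to emergency fixes too
        del self.queue[action_id]
        return True


class CircuitBreaker:
    """Hypothetical automated response: pause as soon as an alert is severe enough."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self.paused = False

    def on_alert(self, severity: float) -> None:
        if severity >= self.threshold:
            self.paused = True


TWO_DAYS = 2 * 24 * 3600  # assumed delay
lock = Timelock(delay=TWO_DAYS)
lock.schedule("patch_contract", now=0)
print(lock.execute("patch_contract", now=3_600))     # False: still locked an hour in
print(lock.execute("patch_contract", now=TWO_DAYS))  # True

breaker = CircuitBreaker(threshold=0.9)
breaker.on_alert(0.95)
print(breaker.paused)  # True
```

In practice the pause power itself is a centralization risk, which is why it is often limited to pausing (never moving funds) and held by a multi-sig.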

Before I move on, I want to highlight the importance of these monitoring solutions as part of a full-stack security setup. If we observe the timing of DeFi attacks, they often come after some form of contract upgrade. Take Yearn Finance, which briefly turned off its 0.5% withdrawal fee during a vault migration. The hawks were watching and struck with a flash loan attack, draining $11M from the vault. While projects are likely to engage auditors after major upgrades, it is not practical to do so for smaller maintenance activities. In these cases, monitoring solutions can save the day.

Project tried a bunch and still got rekt – can nothing be done about it?

My focus is on the upfront security solutions in this article and hence I won’t delve into detail here.

Exploit insurance

I will say, though, that with <5% of DeFi TVL insured, there is a massive untapped opportunity. Several exploited projects have in fact chosen to compensate their users for their losses; for example, Jump Crypto provided over $320M to Wormhole to make its users whole and restore trust. Clearly, these indicate scope for both B2B and B2C exploit insurance. Nexus Mutual was an early mover in the space, and emergent automated markets like Risk Harbor are also very interesting. While these players cover smart contract exploits, they often leave out key theft. To fill these gaps, it would be interesting to see “Coalition”-type models that bundle a security offering with insurance. I would also be curious to see new blue-chip DEXs that curate only tokens that come with such user protections.

Attack forensics & Legal resolution

Given the transparency of current blockchains (though several privacy-oriented chains are being built), fund flows can be accurately and indefinitely tracked as they move between addresses (of course, fund mixers do obstruct this), and forensic tools like Chainalysis and TRM Labs have established their forte here. Note that the funds ultimately need an off-ramp from the chain, and it is getting increasingly hard to launder large sums of crypto. Using forensic tools, authorities have also clamped down on some infamous attackers, such as those behind the Bitfinex hack (stolen BTC now worth $3.6B) – this was indeed a landmark moment for web3 security. While waiting for the justice system to act, projects can also take a proactive stance by using the wallet screening services mentioned earlier to limit exploiter fund movements.
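At its core, tracing fund flows is a graph walk over public transfers. Below is a minimal Python sketch (placeholder address labels, hypothetical function name) that does a breadth-first search from an exploiter’s address and records how many hops away each reachable address is. Real forensic tools additionally weigh amounts and timing, cluster addresses controlled by the same entity, and flag mixer and exchange deposits.

```python
from collections import deque


def trace_funds(transfers: list, source: str, max_hops: int = 5) -> dict:
    """Breadth-first walk over (sender, receiver) transfer edges starting at `source`.
    Returns every reachable address with its hop distance from the source."""
    graph = {}
    for sender, receiver in transfers:
        graph.setdefault(sender, []).append(receiver)

    hops = {source: 0}
    queue = deque([source])
    while queue:
        addr = queue.popleft()
        if hops[addr] == max_hops:
            continue                  # stop expanding beyond the hop limit
        for nxt in graph.get(addr, []):
            if nxt not in hops:       # first visit = shortest hop count
                hops[nxt] = hops[addr] + 1
                queue.append(nxt)
    return hops


transfers = [
    ("exploiter", "hop_1"),
    ("hop_1", "hop_2"),
    ("hop_1", "hop_3"),
    ("hop_2", "exchange_deposit"),
    ("unrelated_user", "exchange_deposit"),
]
print(trace_funds(transfers, "exploiter"))
```

Here the exchange deposit address shows up three hops from the exploiter, which is exactly the kind of off-ramp that investigators watch; the unrelated sender into the same deposit address is correctly not pulled in.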

In the previous sections, I largely covered how projects can protect themselves. Other stakeholders need solutions too! Retail and institutional investors or DAOs exposed to these projects will want upfront security flags, monitoring solutions, hedging/ automated response strategies, etc. Governments/ regulators don’t just need forensic solutions to hunt down criminals but will likely also want a central cockpit to keep tabs on projects that become intertwined with TradFi (e.g., stablecoins) and on likely venues for laundering (e.g., mixers, wrappers). In fact, I think the time is not far off when regulators will impose Basel-type controls on DeFi in terms of reserve ratios, rehypothecation, etc. and will need sophisticated stress testing tools (Gauntlet, which recently raised its Series B, is a very interesting player in this regard).

If any of the above resonates with you and gives you an idea to build, I would suggest you challenge yourself with these five questions:

does the solution only cater to a temporary dislocation/ short term curable attack vector?

what is the feasibility (cost/ effort) to adapt to new attack vectors?

to what degree can the business be productized vs custom designed for each client?

does the outcome of your solution depend on externalities that you cannot control?

what if an exploit does happen – what’s the impact on brand, value proposition, profitability?

As Mark Cuban mentioned in a recent talk, the cost of setting up a web3 business is extraordinarily low, and in my view we are thus seeing a democratization of company building. It is this very pace of innovation that tends to shoot itself in the foot when not designed and managed well. The last thing any of us wants is to get rekt and stop this revolution before it takes flight.

This topic is particularly technical, and I don’t come from a computer science background, so I picked up much of the content along the way. In case there are technical glitches, do let me know – always happy to learn. Also, I have tried to be expansive in my citations, but it’s always possible that I missed someone – thanks to all!

References

Biggest bank robberies - Moneywise

dApp frontend security - Embark Labs

XSS with smart contracts - Consensys Diligence

SmartScan: An approach to detect Denial of Service Vulnerability in Ethereum Smart Contracts – Alalfi et al. (ICSEW, May 2021)

Systematic Review of Security Vulnerabilities in Ethereum Blockchain Smart Contract – Lee et al. (IEEE Access, Jan 2022)

bZx exploit analysis - Peckshield

Yearn Finance exploit analysis - Smart Contract Research

CREAM finance post mortem - Andy Pavia

Axie Infinity multisig attack - Lossless Cash